Tune in to this episode for valuable advice based on questions asked by listeners like you.

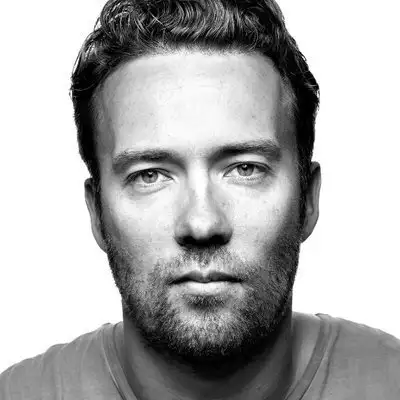

What is Rework?

A podcast by 37signals about the better way to work and run your business. Hosted by Kimberly Rhodes, the Rework podcast features the co-founders of 37signals (the makers of Basecamp and HEY), Jason Fried and David Heinemeier Hansson, sharing their unique perspective on business and entrepreneurship.

Kimberly (00:00):

Welcome to Rework, a podcast by 37signals about the better way to work and run your business. I'm your host, Kimberly Rhodes. It's been just a couple of months since we've answered listener questions, but we've gotten a few in recently about some recent episodes. So we thought we would just dive right in and answer those in a timely way. These have come from LinkedIn, Twitter, YouTube, voicemails and emails. So guys, let's jump right in. The first one is about the two-person teams episode. Kenneth wrote in on LinkedIn and said, "I love small teams. Although a concern often raised for teams smaller than four is how to be resilient to vacation, sickness, and people leaving. Do you have an answer for that?"

Jason (00:42):

Yeah. Vacation, for example, is worked out ahead of time. People kind of know, cause we only do six-week cycles, so people know if they're gonna be gone and they work it out with their team and they'll often catch up ahead of time. So it might be like, hey, this person's out for a week so let's get some of the stuff that they're primarily focused on done early. Let's say it's some design piece or something. Let's just get that outta the way. Programmer can kind of work on that plus some other stuff while they're gone. You just kind of, you just massage the problem. It's like, what do we, I don't know, let's figure it out. So you work that out. Sickness, that happens; nothing you can do about that. And the idea of someone just sitting on the bench waiting to fill in for somebody when they're gone for three days, like, you wouldn't have that anyway.

(01:21):

So you just work around it. Most of it's just working around the realities and focusing on other things that don't necessarily need that other person right now getting ahead of some work and just making it work. And you know, for the last decade or so, since we've been doing the Shape Up style work, it just hasn't been a problem. Um, one of the, the harder things is sabbaticals, which people are gone for a full month and you just kinda again work around it. You go, well we just have to wait a month, not a big deal, uh, to get this person's contribution, so what else can we do in the meantime? You just sort of kind of flow with it and float with it. So it should not stop anything. It shouldn't grind anything to a halt. It's just a different approach to dealing with time.

David (02:02):

I think a key point here is that when we talk about two-person teams, we're talking about two-person feature teams, someone working on something new. We're not talking, generally speaking, about a two person product team. So if something comes up on Basecamp or something comes up on HEY, there's another team that can slot in to deal with that. There's not like we just have a single team that owns an entire product and if they're out, well too bad. Nothing can rescue that project if it needs anything. We have multiple teams on Basecamp for example, we usually have about three teams. On HEY we've been running with two teams on our infrastructure team, we have five, six people. So there's always that flex to give. But then also as Jason says, the important thing is to realize that this is all time boxed.

(02:52):

So the maximum is six weeks and what we say is, what will get cut if it won't fit is scope. So what sometimes does happen is someone is out sick for a week and now that appetite that was six weeks is now five weeks, no biggie. We just cut a little extra. Or I mean if you're out a lot, we'll delay or whatever, but quite often you can actually make it up just on scope. Scope is this magical flexible bubble of energy that you can squeeze into different size containers and I think this is actually the most important part of Shape Up is to change your mindset from estimates – how long does it take to get exactly this – to here's an appetite – we'd like to spend about this long, getting something that addresses this problem.

Kimberly (03:41):

Okay. And like you're saying, when if someone leaves, that's not something you're really planning for anyway. You just figure it out.

Jason (03:47):

Yeah. Oh yeah, there's that too, of course. If that happens, that happens. Also I should say during the planning phase, during the pitch phase where we go to the betting table and bring these ideas, we know what we know ahead of time so we know if someone's gonna be out of town. So we don't overload a team with more work than they can handle. So we we're kind of thinking about who do we have? That's kinda one of the first questions we actually get to, not just what do we have to consider, but who do we have to work on this? Okay, we got four programmers, three designers, this designer's only here for two outta the six weeks. So we've got like two and three quarter designers really and you kind of work that early on as best you can. But yeah, in flight things where people leave or there's sickness, you just deal with it as you go.

Kimberly (04:24):

Okay, this next question came on YouTube and David, this was posted from one of the video snippets that we did, um, where you were saying you have to trust your people and people usually rise to the occasion when you trust your team. So someone wrote in and said, "any prerequisites for people that indeed can be trusted? We're a remote work company since 2007 and built trust into our company culture. It has occasionally led to some team members taking advantage of our good nature."

David (04:51):

I think that's the table stakes. That's the bet you make and you always have to look at the opposite side of that bet. What if you made the opposite bet? What if you made the bet that we don't trust our people? We will have to verify everything that they do and double check it. How much would that cost? It's not about an ultimate solution. It's about a set of tradeoffs. And the tradeoffs of trust are really good when you're working with people for the long term. In fact, in my optics, there is no other bet that makes any form of sense. If you are working with creative people, you have to trust them. Now they may occasionally, from your perspective, break that trust by taking advantage of certain things. I'd say you could look at some of the policies we've had and look at some instances over the years where an observer could come to that conclusion, or you could choose a different conclusion.

(05:42):

You could choose to look at something that at an initial glance looks like someone's taking advantage of a policy and just go like, you know what? We weren't clear enough. We were not clear enough where the boundaries were. We could have been a little bit more explicit. And you know what? Even if someone did take advantage of something in some small sense, just correct it right there. What's the price of that? It's very rare that you have a setup in such a way that anyone can take advantage of you to the extent that this is gonna be a terminal, serious multiple months great financial wound that's inflicted upon you. That's just not how it happens. For example, some companies run, you can take as much vacation as you want. Now we actually did that for a while. We came to the conclusion that it wasn't a great idea, not because people took advantage of it, more the opposite.

(06:32):

They did not take advantage of it. And that comes to the social mores around like, am I taking too much? But let's say someone did take advantage of it, right? First of all, how do you take advantage of an unlimited policy, right? Like there's something there that says self-critique here of the regime that you've set up. You said unlimited but actually you meant seven weeks. Well, maybe you should just say seven weeks then. If eight weeks is taking advantage of something and seven weeks is just right, just be clear. Just be explicit. And I think that's often where a lot of these issues come up: when you're trying to be too cute. When you're trying to say unlimited, but you really mean seven. Don't say unlimited. Just back off from that. Just be clear in your policy, default to trust, and deal with the very few occasions where that doesn't work out.

(07:20):

And if someone repeatedly breaks your trust, you have the ultimate lever here as an employer in an at-will employment state, which in the US is generally how it is. That person no longer works at your company. Okay? That happens. It happens for all sorts of other reasons besides trust. You could hire someone who's simply not good enough at their job and you also have to let them go. I would say that over the 20 years plus that we've run on this method of default to trust, I can think of maybe two episodes, and that would really even be squeezing it, where you could say someone took advantage of something. And the advantage was usually in small sums of money that we didn't miss in the end. Contrast that to if we had defaulted on the other side, checking up on everything, verifying everything, whatever. Over 20 years, if we just spent a thousand dollars a month on that, that's 12 grand a year. That's, uh, 120 a decade. That's a quarter of a million over 20 years. Contrast that to what? Someone taking advantage of $500 on whatever? Bad bet.
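
David's back-of-the-envelope comparison can be checked in a few lines. This is a minimal sketch in Python: the $1,000 a month and the $500 loss are the illustrative figures from the conversation, not real accounting data, and the function and variable names are made up for this example.

```python
# Illustrative figures quoted in the episode, not real accounting data.
MONTHLY_VERIFICATION_COST = 1_000  # dollars per month spent checking up on people
YEARS = 20
ABUSE_LOSS = 500                   # dollars lost in one rare incident


def cumulative_cost(monthly_cost: int, years: int) -> int:
    """Total spend on verification over the given number of years."""
    return monthly_cost * 12 * years


per_year = cumulative_cost(MONTHLY_VERIFICATION_COST, 1)
per_decade = cumulative_cost(MONTHLY_VERIFICATION_COST, 10)
total = cumulative_cost(MONTHLY_VERIFICATION_COST, YEARS)

print(f"per year:   ${per_year:,}")
print(f"per decade: ${per_decade:,}")
print(f"{YEARS} years:   ${total:,}")
print(f"ratio to a ${ABUSE_LOSS} loss: {total // ABUSE_LOSS}x")
```

The 20-year total comes to $240,000, which is the "quarter of a million" in round numbers.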

Kimberly (08:30):

David always doing the, the number, multiplication there.

Jason (08:35):

You can't see on the other side. He's got a massive calculator. Mind control, no, um, yeah, I have nothing else to add. That's a really good answer. And it just, it feels like the right side to take cuz you kind of do have to sort of take sides. Do you trust or do you not? Now we also have this thing called the trust battery, which is sort of on an individual basis. People come in to your company at about what 50% is typically what we talk about, which is like they've gotta earn some more trust, but they're also given a bunch of trust upfront. Um, and this is more about really about self-driving work and some other things. It's not like we don't, we think you're gonna steal from us. It's, it's not that kind of thing, but you're at about 50% and then you earn, you lose, and this is actually with each individual person.

(09:20):

So my trust battery with someone might be at 75%. David might only have a 35% because he's had a couple bad experiences with somebody, or it could be reverse or whatever it is. And so it's, it's an individual thing. There's also to some degree an organizational trust battery here as well. But uh, this is another concept. This was from, uh, Tobi I think at Shopify. I think this was his, or maybe he got it from someone else. But we really think it's a good, it's a good thing to consider as well. When you're thinking about talking to somebody and wondering why you do or don't like what they're doing, you should first look at, like, what's happened in the past that would cause me to have this lens on this person? And typically it's, it's some break of trust or some earning of trust.

David (10:02):

Now one small point I'd add here is that the trust needs to be proportionate. You shouldn't trust, in the most general literal sense, someone to take down your entire site the day they start. That's just bad policy. That's not really about trust per se. But for example, one way we incorporate that principle into new hires at 37signals is with the mentorship model. That's particularly on the programming side. Programmers deploy new versions of Basecamp and HEY, and if those versions are bad, the whole system can go down. You don't wanna put that trust, it's actually a punishment to put that trust on someone from day one. They're not ready for that. They don't know how all the systems work. They need someone else to help them verify the work. So you have to be careful with the trust lens. Um, to me I look at it as, would that person actually appreciate the trust? Do they feel like they're ready to, say, deploy a new version of the app? If so, don't make them cut a bunch of red tape to get through to push the work that they're doing out. But at the same time, help them build that trust in themselves before they're given sort of organization-wide trust to perhaps make a costly mistake.

Kimberly (11:23):

Okay, our next question is a voicemail.

Listener (11:27):

Hello Kimberly, Jason and David, I would love to hear more about how QA works at uh, 37signals. There's a lot of talk about your development and two-man teams, but you never referenced QA or testing in there and um, and you've, you mentioned you do have a QA person, uh, and also with some outside help. How does that work? Is the, is the two-man team primarily responsible for functional testing and your QA teams doing regression or edge case or how does that all come together? Uh, especially within the, the Shape Up paradigm in the six week cycles? Thanks

Jason (12:12):

David, you wanna take that? Sure. Since there's some technical questions in there, in some ways.

David (12:16):

So we've actually recently changed how we do QA in the sense that we went from one QA person to two QA people, and in the process of doing that we stopped using the external vendor we've been using for quite a few years. And that though still leaves us with the same setup as before. QA is a way for product teams to build confidence that what they ship will work. Now that's quite different from having QA as a gate that you have to pass in order to be able to deploy. We use QA testing as a way to build confidence that the stuff we're pushing out works, which is not always needed. Some things we do are small enough or inconsequential enough or low criticality enough that they don't need a QA step to be pushed out. Now most of the big things we do, they absolutely do need those things.

(13:12):

An example of this was when we launched the Card Table feature in Basecamp. That was a major new feature. Uh, it had a web component, it had native mobile components. It was uh, quite a big machinery and quite a few things to test. But what's interesting about that case is actually it was a new feature, which to some extent places it on a lower threshold in terms of criticality than if you're mucking around with something existing. When we launched Card Table there was zero customer data in it. So that's just one example of the criticality. We think the worst thing for us is to lose someone's data. That's even worse than, like, the app being down. If you lose someone's data you are really denting the trust, and you generally speaking only get to do it once, if at that. They'll stop being customers.

(14:00):

But the process otherwise is that the QA team floats. As we have features that are getting towards the end of their development cycle, they'll usually put something on the QA uh, team's Card Table. It's like hey, we'd like to uh, get some eyes on this. The QA team will swoop in, start doing what we call black box testing. That is, they don't know the implementation, they're not diving into the code. They're doing the testing as a user would, which is actually one of those key benefits of having an external QA team not be part of the development team itself is that they don't come with all the assumptions that the development team built in. The thing about developers and even designers who've been working on something is that they are in the happy path mode. They will test the things as their mental model of the feature that they built allows them to see the world.

(14:54):

The QA team comes in with a completely blank, fresh slate, so they're able to poke at the feature in all the ways that users will poke at the feature that the designer and the programmer didn't consider. So it is really helpful for that and it helps us catch errors early on. But you could even ask, why are we even doing that? Cuz we actually did not have a QA team for many years. I think Michael cycled over to be on QA maybe in 2010 or 11 or something. That is six years in and five products into the life cycle of this business. At that time we were already doing many millions of dollars in revenue and we did not have general QA. Why? Because if we made mistakes, customers would catch 'em and they would write to us and it wouldn't be a big deal.

(15:40):

We'd push something out, it wasn't right. 10 people would write in to us. Now we're at a scale where, if we pushed something out like Card Table and there's a major obvious flaw that a bunch of people find, 500 people will write in. That's why we do the QA upfront. So we don't have to answer 500 people: oh yeah, sorry, we know about it, we're on it. Right? But you don't actually need it early on. I would not add a QA team or person to the company in its very early stages. QA should be something that you can deal with as the developers and the designers who are already there, as customer feedback comes in. Let them find it, again, within reason. If you're dropping people's data, you're not gonna have a chance to build up many customers because they will simply flee. But overall speaking, that's how I like to think of it.

(16:27):

It's a function of building confidence when the consequences of getting it wrong are high enough that the trade off is simply worth it. Now the other factor here is we've just built better software. So I think there's something nice to it in that sense, but I've also seen other organizations where this relationship becomes dysfunctional very quickly. You have two sides, and you have developers or product teams who think of throwing things over the wall, that they don't actually have to care about quality cuz that's someone else's problem. Someone else will find all the issues. That's not how we run the show here. You are responsible for your own quality and the QA team is there to find the edge cases, not the other way around.

Kimberly (17:06):

And David correct me if I'm wrong, I mean my very limited programmer knowledge, but I feel like I see in these check-ins that programmers are also checking each other's work and not just relying on QA. Is that kind of the case as well?

David (17:18):

That's a great point. QA, sort of like marketing, sort of like a lot of things is everyone's job. I do QA all the time. I find issues, I write a report, I log it, I follow up. And so do we during development when um, you have someone reviewing your code, we do code reviews for anything, I was about to say substantial, but the bar's even lower than that. Anything that's more than just a little bit whatever that is, um, you'll have someone else come in and take a look at the work. When it's someone junior, someone who just joined the company, every piece of work is checked by a mentor. This is part of the model that we've set up to get people leveling up quicker and to ensure that the quality is right when it goes out. But that level of checking is at the per code line level. It is usually less about sort of the black box testing. So these things do go hand in hand. I would say virtually always we have someone reviewing the code itself and then occasionally and on the bigger things and the higher criticality we have the QA team sweep in.

Kimberly (18:26):

Okay. Um, another question about our two-person teams. This was an email from Justice. "Hi Jason and David, big fan here of your books, both your products and Ruby on Rails. I understand how you're allocating up to six weeks and teams of two, one developer, one designer, for new features. How do you handle A, bugs and B, smaller tasks or even features that are standalone but take between a few hours and say two weeks? For example, are those also taken up by teams of two if they include a design component, or do you use kanban, or do you just have a dedicated person on standby, or no other planning for those?" I don't think we have a lot of people just standing around waiting for work.

Jason (19:10):

Well there's a few different degrees of this one so I think people get confused and it must be our fault cuz we're not clear about this. Not every project takes six weeks. Six weeks is the maximum amount you can spend on any given chunk of work or a feature or whatever this is. Many projects take a week, three weeks, four weeks, a day, right? It sort of depends. And so when we assign work initially we're like, this is a six weeker, this is a two weeker, this is a four weeker. These are, these are the appetites as David talked about in a different question, uh, or answer. These are appetites, they're not estimates. We're not saying we think this will take two weeks. We're saying we're gonna give it two weeks now we gotta figure out how to make it work in two weeks. So there are shorter ones and um, sometimes these are two-person team features.

(19:56):

Sometimes they're one-person features. Um, there might be some couple day features that a designer works on solo and a couple day features that a programmer works on solo. There's just, again, it's a give and take. It's a mix. You kind of figure it out as you go. You don't put two people on something that just needs one person. You know, you, you just figure it out, right? So there's that. That's the planned work. That's all the stuff that we're expecting to do. And then there's reactive work, which is a different side of this, which is uh, stuff that, you know, it could be infrastructure based, it could be uh, performance issues. There could be bugs that pop up from a previous release. There could be uh, new bugs that just pop up. There's always bugs. There's always a list of things perhaps that need to be dealt with.

(20:38):

We have an on-call team that works on some of these things. Sometimes in the down times between cycles, someone might pick off a few bugs that they wanna solve, they wanna fix, that have been bothering them or bothering customers. Sometimes some of these things can be handled by someone on support, depending on what they are. If it's not really a bug necessarily but it's an issue a customer is confused about or doesn't understand or something, you just kind of figure that out. But there are people that are dealing with reactive work here that are not dealing with the proactive work. So proactive work is the scheduled work. Reactive work is the stuff that bubbles up and comes up, and there are teams and procedures in place for that. What we don't do is stop everything when there's a bug, unless it's a critical code red kind of thing where like there's data loss or something significant, right? We just don't say, okay, we have to fix every bug before we can move on to something else. We accept that some bugs are going to exist, they're gonna be okay, they're not critical. They might affect a small portion of the customer base. We might know we wanna deal with them but we don't have time now; we'll get to 'em later. So it's this constant negotiation and renegotiation of what's worth doing. But yes, two kinds of work and two different structures for how to handle those kinds of projects.

David (21:49):

Another set of techniques we've used occasionally have been bundling. So we know there is for example some bugs that we haven't been able to get to during the on-call time or during cool down. But we really do occasionally wanna do a spring cleaning. So sometimes we literally do it in the spring and we call it a spring cleaning and we give someone, hey here's a three, four week appetite for you to use to work on the list of issues that otherwise aren't bubbling up to the criticality that would stop work, and they're too big to be handled in the reactive time. We've also commonly done this in December. December is always sort of a slow mode month anyway because we have Christmas and holidays and so forth and it actually lines up that there's a bit of slack when we do six cycles a year.

(22:34):

It doesn't exactly line up. There's a few weeks left over and we quite often use those weeks that are leftover for what we'd like to call roaming time. And that roaming time is, hey pick up on the issues that you really wish you'd gotten to but just aren't that critical. Um, that's a really great way of doing it. But I think as Jason says, the important thing is that you can't make the Shape Up method work if you're constantly interrupting people. The people who are working on a feature, when we say hey our appetite is six weeks, that usually means it's ambitious. That usually means it requires some cuts. If you're then bugging them multiple times a week with other stuff, even though it is quote unquote important, they're just not gonna, it's not gonna work. They're not gonna be able to get into the groove, they're not gonna be able to deliver on the appetite that you set out and they're gonna get frustrated.
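
The leftover weeks fall out of simple calendar arithmetic. A minimal sketch, assuming a 52-week year and that each six-week cycle is followed by the two-week cool down David describes later in the episode; the names are illustrative only.

```python
WEEKS_PER_YEAR = 52
CYCLE_WEEKS = 6      # maximum appetite for one cycle
COOLDOWN_WEEKS = 2   # assumption: a cool down follows every cycle
CYCLES_PER_YEAR = 6


def leftover_weeks(cycles: int, cycle_weeks: int, cooldown_weeks: int) -> int:
    """Weeks in the year not covered by cycles plus their cool downs."""
    scheduled = cycles * (cycle_weeks + cooldown_weeks)
    return WEEKS_PER_YEAR - scheduled


scheduled_weeks = CYCLES_PER_YEAR * (CYCLE_WEEKS + COOLDOWN_WEEKS)
roaming_weeks = leftover_weeks(CYCLES_PER_YEAR, CYCLE_WEEKS, COOLDOWN_WEEKS)

print(f"scheduled: {scheduled_weeks} weeks, left over: {roaming_weeks} weeks")
```

Under those assumptions, six cycles account for 48 weeks, which leaves about four weeks for things like the December slow period.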

(23:25):

People don't like signing up for a mission that's just doomed to fail because they can't find the time to actually get work done. So the on-call is the main release valve we have these days. We have two people on on-call that's just a function of our size. Uh, in the early days we used to just have a single person on-call and in the even earlier days you again don't have this at all. This is a technique you introduce once you have more issues and and they kind of pop up. But you have to use all these kind of tricks, techniques, approaches at the same time they're sort of interlocking. You can't just say, well we're gonna work on this for six weeks and then if a critical bug comes up, too bad customer's gonna have to wait weeks. Yeah, no, there's no customer who's gonna be willing to wait while their data is corrupted or they can't access it or whatever.

(24:11):

Um, if it's truly atrocious, as Jason says, we have a two-tier code system. We have code red, which is for when the whole app is down or materially down or there's another major, major issue. That's where people will literally get woken up in the middle of the night, and that's rare. Very rare that we have those issues. More common is code yellow. Code yellow is usually for people who are dealing with their own stuff. They have to clean up. Say you are a person who worked on a feature last cycle, you pushed it out, then into the next cycle, you're already onto the next thing. There's a kind of material issue, it's not a totally drop everything issue, but you're, it's kind of your fault. And that's not like a blame thing in the grand scheme of things. It's just like, no, why should someone else clean up your shit?

(24:58):

Just like, hey, clean up your own shit, and you will eventually learn that, like, you'll have less of that if you keep it more tidy. So code yellow is essentially a permission to stop other work, and that's why we call it a code yellow, and we have a little ceremony and we post a little message and it has this template like, oh, this is what happened and this is who's working on it and you can call me here and whatever. That said, we kind of want to give the impression that this shouldn't be happening all the time. It will inevitably happen. Everyone pushes stuff out that will lead to a code yellow sometimes. It should be not so common, but if it's needed it's there. We're not gonna be militant about, like, you can't return to previous work until six weeks have passed if it's kind of important.

Jason (25:41):

And David, do you wanna quickly give a primer for how the on-call rotation works?

David (25:45):

Sure.

Jason (25:46):

Is it just two people that are always on on-call, or are people rotating? People are always curious about that.

David (25:51):

Yeah, that's a good question. So, this is one of those processes where I think we've tried about 400 different models over the years. We tried to have an on-call that was like, you're on once a day, then we tried an on-call where you were on for a whole cycle, six weeks. Then we tried an on-call where you were on for two weeks. We tried having on-call with one person, with two people. What we've ended up with is that we now have enough programmers that you can be on call one week out of every cycle, which is a really nice cadence, and you get to be on call with a buddy. So there's two people on call and they will work one week together on dealing with the issues and then you'll pass it on to the next team. And the way we set this up is a little bit like the other answer: you know in advance, when you kick off a cycle, who's gonna be in, who's gonna be out. Now occasionally you'll have sickness or something to, to swap and fill in with, but vacation, for example, we can schedule around that.

(26:45):

So we set the on-call schedule a cycle in advance. At the end of a cycle we go like, okay, who's in, who's out? Line it all up, mix it up. It's also a great way to work with someone. When you have an on-call buddy you're usually sharing problems, and it's a way for people who don't perhaps work together all the time, from different teams, to work together on this ad hoc team and learn from each other. So I think it's a good model. We certainly have some people who like it more than others. Let's just put it like that. Um, not everyone loves the on-call rhythm, which at times can be kind of slow and then other times kind of hectic, and it does mean you're dealing with perhaps five or 10 different problems in a given day, versus our normal cadence of work, which is very much, hey, you just get to go into your zone, work on a problem for long stretches of uninterrupted time. This is sort of the opposite of that.
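
One way to picture the rotation David describes is a small scheduler that pairs available programmers, one pair per week of a cycle. This is only a sketch under stated assumptions: the programmer names, the six on-call weeks, the availability format, and the greedy pairing rule are all hypothetical, and the episode does not say how 37signals actually builds its schedule.

```python
import random


def build_on_call_schedule(programmers, unavailable, weeks=6, seed=None):
    """Assign a pair of on-call buddies to each week of a cycle.

    programmers: list of names.
    unavailable: dict mapping a name to the set of 1-based weeks they are out.
    Each person is on call at most once per cycle.
    """
    rng = random.Random(seed)
    pool = list(programmers)
    rng.shuffle(pool)  # "mix it up" so pairings vary from cycle to cycle

    def free_weeks_left(person, from_week):
        # How many weeks from now on this person could still take a shift.
        out = unavailable.get(person, set())
        return sum(1 for w in range(from_week, weeks + 1) if w not in out)

    schedule = {}
    for week in range(1, weeks + 1):
        free = [p for p in pool if week not in unavailable.get(p, set())]
        # Greedy heuristic: place the most constrained people first.
        # The sort is stable, so the shuffled order breaks ties.
        free.sort(key=lambda p: free_weeks_left(p, week))
        if len(free) < 2:
            raise ValueError(f"not enough available programmers for week {week}")
        pair = (free[0], free[1])
        schedule[week] = pair
        for person in pair:
            pool.remove(person)
    return schedule


# Hypothetical team of twelve, so everyone is on call one week per cycle.
team = [f"programmer_{i}" for i in range(1, 13)]
out = {"programmer_3": {1, 2}, "programmer_7": {6}}  # planned vacation weeks
schedule = build_on_call_schedule(team, out, seed=1)
for week, pair in schedule.items():
    print(week, pair)
```

The greedy rule is a simplification and can fail on tightly constrained calendars even when a valid schedule exists; sickness would still need the kind of manual swap David mentions.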

Kimberly (27:37):

Okay, one last thing before we wrap up because it, I think it goes along with this listener's question. I feel like we talk a lot about these six week cycles, but we don't talk a lot about the cool down. David, do you wanna talk about that kind of period of time? Because I think that bugs and smaller tasks sometimes happen during that cool down, but I don't think we really tell people about that.

David (27:55):

Yeah, I think the cool down is actually really important. It's a crucial part of Shape Up and it came about because we didn't used to have it. What we used to do was we used to bookend major feature work right up against each other. You would just ship a major feature and then bam, Monday you're onto the next thing. And there's just something about that. It's almost like that awful word from scrum, sprints. Like if you're constantly sprinting, when are you catching your breath, when are you recharging? When are you just going like, okay, can you give me a minute here to just like collect my thoughts before I jump into the next thing. I actually think cool down, the investment if you will, of two weeks, six times a year where we let people roam. Again, there's that word and the word really means you get to choose, here's two weeks.

(28:48):

It's not that you're like playing games. It's not that you're not at work, you're absolutely at work, but the work is self-directed. And what I've found is that the vast majority, well, all of the programmers and all of the designers, they always have stuff on their little lists. Their own little list of things they'd like to fix. Sometimes it's a bug that's not that critical, but they just are annoyed about. Sometimes it's a refactoring; that means we're changing how the code is made or the design is made in a way that doesn't change how the product works, but just makes it cleaner on the inside. That's really important to tend to. That is a key sort of gardening technique to ensure that, okay, for next season we won't have a bunch of weeds we suddenly have to stop all work to take care of, because we've been kind of doing it along the way.

(29:36):

So the cool down gives people time to do that. Occasionally we were deluding ourselves a little bit. We were calling it cool down even for people who were working on these six week feature teams, and now we've actually revised that. I don't know if we've done that in Shape Up, I don't think we've done it on the online thing. Maybe we've written about it. Now we have sort of two modes. We have cool down for someone who hasn't just worked on one of those most ambitious projects that take six weeks. If you worked on like three different things that were two weeks each, cool, you're ready for cool down. If you're on a six weeker, what often happens is the last week of the six weeks, you're almost done. You're like 95% done. That's just human nature. If it's at five weeks, you would've been almost done at the end of the fifth week, but we say six weeks, you're almost done but not quite.

(30:22):

There's a little bit of polish. So now it's cool down slash overrun. And overrun is to give yourself sort of a buffer. It's kind of like an engine. It can have a soft limiter where you hit the red line and the engine doesn't blow up because it actually has 500 RPMs in spare before things start going nuclear, right? So we leave those two weeks in as that overrun. It's the 500 RPM extra, knowing that occasionally it'll go over, without it spilling into the next cycle. Now what's key there is that if someone just keeps doing overrun cycle after cycle after cycle, well then there's no cool down. So what we also try to do is swap it around. Someone who just worked on an ambitious six week project that had overrun? Do you know what? They shouldn't work on that next cycle. They should preferably work on two three weekers, or a three weeker and a one weeker. What's also key here is we don't actually try to schedule a solid six weeks for every single person who's on the team. We usually leave a bit of slack. That allows us to deal with sickness, that allows us to deal with other issues that come up. So what often happens is we have something like two thirds or three quarters of the time technically available on a Calendar Tetris game scheduled, and then the rest we leave open.

Kimberly (31:41):

Okay, well with that we're gonna wrap up this ask-me-anything. But again, if you have a question for Jason or David, hit us up, let us know. You can leave us a voicemail at 708-628-7850 or you can send us an email at rework@37signals.com. People have been YouTube-ing us, LinkedIn'ing us, tweeting at us. Email or voicemail is the best way. Rework is a production of 37signals. You can find show notes and transcripts on our website at 37signals.com/podcast. You can also find us on Twitter at @reworkpodcast.